Value Vector

IoT Security in the Real World – Part 2: Enter The Cloud

Jesse DeMesa

Overview

In Part 1 of this series (IoT Security in the Real World – Part 1: Securing the Edge) we looked at the IoT Security Landscape from the perspective of an “Edge” device sitting out in the field. In this posting, we will shift our focus up into the Cloud, where an entirely different set of risks and concerns present themselves. Only by viewing the end-to-end landscape can we truly begin to address the concerns in IoT Security.

NOTE: This post also happens to be based on a real Security Assessment pitch I put together for a client of Momenta Partners.

Our Deployment Environment

We will continue to use the example scenario we laid out in Part 1 – The Oil Field. We have already introduced a Gateway device into our operating environment near the Well Head, so we have data flowing up into the Cloud.

In this posting we will review the Cloud-side security impact of the following setup:

- A proprietary piece of “Agent” Software running on a series of Gateways that send data and receive commands from the Cloud

- An Amazon Web Services (AWS) hosted IoT Cloud platform

Since there is so much to cover in the Cloud, I have decided to break this topic up into two separate articles, with this posting focusing on the layer of the Cloud where the Data from the Edge is first received. In Part 3 we will take a look at the Security concerns on the Data, API and User Management side.

Attack Surfaces Overview

First, let’s review the 3 “tiers” within the IoT stack:

- Edge: Everything at the “Edge” of the Network, where Data is being Acquired.

- Transport: The communication channel used to move data from one place to another

- Cloud: The Server components that receive data and allow remote Users to interact with the Edge devices.

For this posting we will review the Attack Surfaces which were introduced in the Cloud once we introduced a connected Gateway into the Well Head environment.

For the sake of this discussion, we will pretend we live in an ideal environment where Connectivity options are stable and readily available, and where the local environment around the Well Head has been completely secured. We’ll further assume that TLS is in play (either as part of HTTPS or paired with TCP directly for something like MQTT).

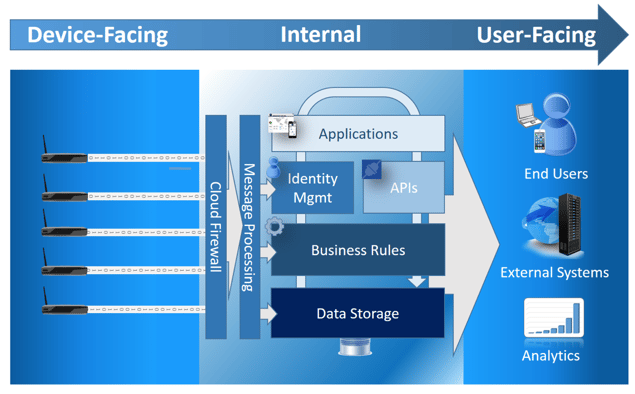

Here we have a breakdown of the core functions of your standard IoT platform. This may represent a single monolithic offering (PLAT.ONE, ThingWorx, Davra), a series of Component IaaS services (Azure IoT, AWS IoT), or a hybrid of COTS and proprietary technology.

Let’s review these core components, and then take a look at the Attack Surfaces within each:

Cloud Firewall

We often ignore, or take for granted, the requisite Firewall / Switch / Router infrastructure. In the case of an IaaS Solution, this tier may be hidden behind a relatively simple UI facade, or in the case of a Solution Provider it may be completely transparent.

The IoDoS?

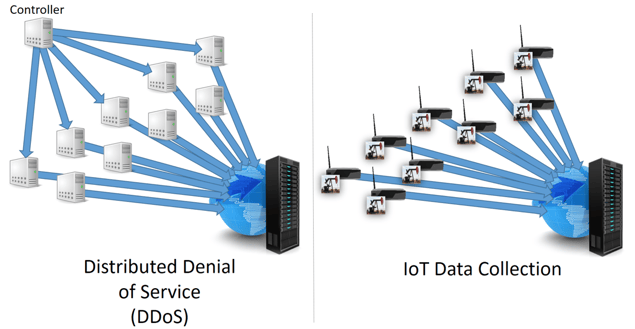

Back in my days at Axeda Corp. (now PTC), it was frequently pointed out that an IoT solution in production often generates a traffic profile that looks remarkably like a Distributed Denial of Service attack (DDoS). Numerous endpoints will be sending in large volumes of what can look like nearly identical data.

In a normal operating mode, this can be managed through tweaking configurations related to number of inbound connections, aggressive timeouts, and horizontal scaling.

Where this tier becomes critically important, however, is when one or more of the data-generating Edge devices has entered into an operating mode where it is generating data constantly. This can be due to a misconfiguration, an exploit, or an unexpected condition that triggers a loop. In this case, it may become necessary to “mute” one or more of these extremely chatty Edge devices.

In this scenario, it is critical to stop this traffic as close to the “front” of the stack as possible, to mitigate any impacts that may occur within the rest of the solution. If one of these “stuck” devices is allowed to begin flooding the Message Processing bus, or the Business Rules engine, then we have a real Denial of Service situation on our hands.
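To make the “mute” idea a bit more concrete, here is a minimal sketch of a gate that sits in front of Message Processing and drops traffic from muted or overly chatty Devices. The class name `DeviceGate`, the per-window message budget and the auto-mute behavior are all assumptions for illustration; in a real deployment this logic would more likely live in the Firewall or load balancer tier than in application code.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, Optional, Set, Tuple


@dataclass
class DeviceGate:
    """Front-of-stack gate that drops traffic from muted or chatty devices."""

    max_messages: int = 100        # hypothetical budget per window
    window_seconds: float = 60.0   # hypothetical window length
    muted: Set[str] = field(default_factory=set)
    # device_id -> (window start time, messages seen in that window)
    _windows: Dict[str, Tuple[float, int]] = field(default_factory=dict)

    def mute(self, device_id: str) -> None:
        """Explicitly silence a device, e.g. from an operator console."""
        self.muted.add(device_id)

    def unmute(self, device_id: str) -> None:
        """Let a device talk again and forget its previous window."""
        self.muted.discard(device_id)
        self._windows.pop(device_id, None)

    def allow(self, device_id: str, now: Optional[float] = None) -> bool:
        """Return True if this message may pass on to Message Processing."""
        if device_id in self.muted:
            return False
        now = time.monotonic() if now is None else now
        start, count = self._windows.get(device_id, (now, 0))
        if now - start >= self.window_seconds:
            start, count = now, 0  # the old window expired, start a fresh one
        count += 1
        self._windows[device_id] = (start, count)
        if count > self.max_messages:
            self.muted.add(device_id)  # auto-mute a "stuck" device
            return False
        return True
```

A muted Device stays muted until someone calls `unmute`, which mirrors the explicit operator control described above. Whether a Device should also be un-muted automatically after a cooling-off period is a policy decision this sketch leaves open.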

The Syn of Omission

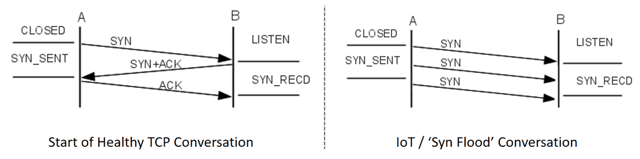

One of the biggest assumptions that we make when setting up the Cloud component of an IoT solution is that we have a healthy TCP/IP stack at the Edge. In reality, different communication methods will carry with them very different profiles for communication.

Take our cell-connected Gateway, for instance. It is highly unlikely that it will be able to maintain a persistent and healthy TCP/IP stack across the badlands of West Texas. What is more likely to happen is an unpredictable set of drops, timeouts and retransmissions of the IP data.

I witnessed this firsthand with a customer that was moving connected vending machines from WiFi to Cell connectivity, and was hosting their own infrastructure. They were seeing a high degree of packet and message loss from the edge, and I was brought in with some others to investigate. What we found was that the rules that were in place by default in the Firewall to protect against a “Syn Flood” attack were responsible for the message drops.

The Cell traffic would cause numerous TCP “conversations” to be started due to packet drops from the Edge, and to the Firewall that burst of connection attempts looked like a Syn Flood.

In this instance it was necessary to make modifications at the Firewall/Router tier to account for the altered IoT traffic pattern. This trade-off must be well considered, since Syn Flood attacks are a very real threat, and other measures should be put in place to detect and mitigate that issue.

Known Unknowns, or “Who is This”?

Much like we see in the DDoS style of attack, the producers of data (perhaps wrongly labeled the “client” in many worldviews) within an IoT Ecosystem will not necessarily come in from known and stable IP addresses. In many cases, there will only be a routable return path for communication, with no way to directly “reach out” to touch or validate a Device.

Let’s quickly take a look at some common strategies for trying to Identify a Device whose IP address is ephemeral:

- Request Parameter / Header / Request Body

In many solutions, including straight RESTful implementations, CoAP and most proprietary protocols, the Device ID is embedded directly inside the Message or components of the Request. This seems like a valid design decision, and over plain-text transports this is a valid approach.

Once you introduce SSL/TLS, everything except the domain of the Request (not the full URI) becomes completely opaque. This means that in order to validate the Device Identity you will need to offload SSL (either in the switch or further upstream) before you can even access the ID component of the message.

This is possible, but it exposes another Attack Vector, in the form of spurious requests being flooded into the SSL offloading framework.

- SSL Client Certificates

This is by far the most secure of the common IoT deployment solutions. In this strategy you have a unique Cert distributed to each of the Devices in the field. This provides a mechanism to prevent communication from un-approved Devices, since the SSL handshaking will not succeed, preventing any messages from flowing upstream to other components. Since the bad cert can be detected by the front end, it is possible to “Blacklist” the IP addresses responsible for failed client cert requests.

Now, this is practical for larger footprint IoT Devices, and for organizations that already possess the deployment and provisioning infrastructure to take on this burden it will pay big dividends.
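As a rough illustration of the Client Certificate strategy, the sketch below uses Python’s standard `ssl` module to build a server-side context that refuses any handshake without a certificate signed by the Device CA, plus a small counter that blocks IP addresses after repeated failed handshakes. The file paths passed in, the `HandshakeFailureTracker` class and the threshold of five failures are assumptions for illustration and not part of any particular platform.

```python
import ssl
from collections import Counter
from typing import Set


def build_server_context(server_cert: str, server_key: str, device_ca: str) -> ssl.SSLContext:
    """Build a TLS server context that demands a valid client certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.load_cert_chain(certfile=server_cert, keyfile=server_key)
    # Only certificates chaining to this (hypothetical) Device CA are accepted.
    ctx.load_verify_locations(cafile=device_ca)
    # The handshake fails if the client presents no cert or an untrusted one.
    ctx.verify_mode = ssl.CERT_REQUIRED
    return ctx


class HandshakeFailureTracker:
    """Counts failed handshakes per source IP and blocks repeat offenders."""

    def __init__(self, max_failures: int = 5):  # hypothetical threshold
        self.max_failures = max_failures
        self.failures: Counter = Counter()
        self.blocked: Set[str] = set()

    def record_failure(self, ip: str) -> bool:
        """Record one failed handshake; return True if the IP is now blocked."""
        self.failures[ip] += 1
        if self.failures[ip] >= self.max_failures:
            self.blocked.add(ip)
        return ip in self.blocked

    def is_blocked(self, ip: str) -> bool:
        return ip in self.blocked
```

The front end would call `record_failure` whenever wrapping an accepted socket raises `ssl.SSLError`. Since Device IP addresses are often ephemeral, as noted earlier, block entries should probably expire after a while; that is left out here to keep the sketch short.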

- Named Queues

For any implementations using AMQP, MQTT or any other Message Queue based protocol, there is a lot of flexibility provided to segregate traffic. This can be based on Topics, Queues, or other classification components that allow Messages to be ingressed, processed, stored or forwarded based on the needs of the Device and message type.

Since a new Topic or Queue is essentially free (I know, there are limits to everything, but the processing overhead for reasonable numbers of queues and topics is well within the specs of most Message Bus instances), new Devices can be assigned to a specific Queue or Topic to limit the scope of the messages.

The judicious use of named Queues can allow traffic from new Devices to flow into ephemeral storage until such time as the Device is authorized to produce real data.
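The Named Queues idea can be sketched without tying it to any particular broker. In the example below, messages from Devices that are not yet authorized are routed to a quarantine Topic and held in a small bounded buffer, and are only released once the Device is approved. The Topic names (`quarantine/...`, `devices/.../telemetry`), the `QuarantineRouter` class and the buffer size are hypothetical, and the in-memory buffer simply stands in for whatever ephemeral storage the Message Bus provides.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List, Set


class QuarantineRouter:
    """Routes unauthorized devices to a quarantine topic with bounded storage."""

    def __init__(self, max_held: int = 100):  # hypothetical buffer size
        self.authorized: Set[str] = set()
        # device_id -> most recent messages held while awaiting authorization
        self.held: Dict[str, Deque[bytes]] = defaultdict(lambda: deque(maxlen=max_held))

    def topic_for(self, device_id: str) -> str:
        """Pick the topic a device's messages should be published to."""
        if device_id in self.authorized:
            return f"devices/{device_id}/telemetry"
        return f"quarantine/{device_id}"

    def route(self, device_id: str, payload: bytes) -> str:
        """Return the destination topic, holding the payload if quarantined."""
        if device_id not in self.authorized:
            self.held[device_id].append(payload)  # oldest entries fall off
        return self.topic_for(device_id)

    def authorize(self, device_id: str) -> List[bytes]:
        """Approve a device and hand back whatever was held for it."""
        self.authorized.add(device_id)
        return list(self.held.pop(device_id, []))
```

Bounding the buffer means a new or unknown Device cannot consume unlimited storage while it waits for approval. A real implementation would also need to validate the Device ID before embedding it in a Topic name.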

Message Processing

Message Ingress

We’ll assume that the Firewall / Switch / Router infrastructure is in an ideal state and properly securing the inbound traffic. We now assume that all traffic making its way through the Firewall is indeed sourced from our own Devices, or by entities that look, act and feel like them from a traffic perspective.

Probably the most diverse component of IoT solutions is the Protocol which is chosen to communicate the data from the Edge up to the Cloud. In the original World Wide Web proliferation, we agreed on a series of protocols and specifications at different levels to enable the interaction of distributed machines. TCP/IP->HTTP->HTML, with a sprinkling of DNS, was enough to drive the network effect and start the explosion.

Sadly, in the current fractured state of IoT, we can’t even come to agreement on the style of communication that best suits IoT. This may in fact be a blessing, as not all Devices will fit a homogeneous profile.

The first piece of the Message processing problem we’ll refer to as “Message Ingress”, meaning the initial receiving and processing of messages. Let’s take a look at a few of the patterns of Ingress and their inherent risks.

Transactional

All RESTful communications will fit into this model. A connection is established to a specific URI, a Request is made, the client will remain blocked until a Response is returned, and that exchange is complete. There may be multiple requests made across a single connection, and many across a single Session.

- Benefits: Easily tested; Near ubiquitous support.

- Risks: VERY tight coupling between the Client and Server. Numerous exploits available, particularly prone to Discovery / Probing style of attacks.

Pub / Sub

MQTT / AMQP fit cleanly into this model. With this approach the Client and Server both produce (Publish) and consume (Subscribe) messages to/from a Queue. This provides a completely asynchronous connection between the Client and the Server. That maximizes the decoupling effect, at the expense of additional infrastructure cost, and a slight latency bump.

- Benefits: Also very testable; Decouples Server and Client, which helps in scalability

- Risks: Dynamic Queue/Topic creation exposes a new Attack Vector in the form of Queue/Topic explosion; Once a message queue is opened, it is typically not bound to a specific IP address, exposing an Imposter Attack Surface
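One way to blunt the Queue/Topic explosion risk is to cap how many Topics a single Client may create. The sketch below is broker-agnostic and purely illustrative: `TopicRegistry` and the limit of ten Topics per Client are assumptions, and a real Message Bus would typically enforce this through its own access control configuration instead.

```python
from collections import defaultdict
from typing import Dict, Set


class TopicRegistry:
    """Caps the number of distinct topics each client may create."""

    def __init__(self, max_topics_per_client: int = 10):  # hypothetical limit
        self.max_topics_per_client = max_topics_per_client
        self.topics: Dict[str, Set[str]] = defaultdict(set)

    def request_topic(self, client_id: str, topic: str) -> bool:
        """Return True if the client may publish or subscribe on this topic."""
        owned = self.topics[client_id]
        if topic in owned:
            return True   # already created earlier, nothing new to allocate
        if len(owned) >= self.max_topics_per_client:
            return False  # refuse to let one client keep minting topics
        owned.add(topic)
        return True
```

This addresses only the explosion risk. The Imposter risk is a separate problem that needs the Client itself to be authenticated, for example with the Client Certificates discussed earlier.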

Persistent / Streaming

With the increased support for WebSockets, we have seen a significant increase in the number of IoT Devices supporting Persistent Streaming style protocols for communicating with the Cloud. This provides by far the lowest latency and easiest “contactibility” for the connected Devices.

This approach is typically found in proprietary protocols, and can allow for remote tunneling, file transfer, and even streaming audio/video directly from the Devices.

- Benefits: Lowest possible latency; Enables streaming content

- Risks: Very high overhead in terms of concurrent connection management; Exposes a network-level DoS Attack Vector since the connections remain up
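For the persistent model, the two usual levers against connection exhaustion are a hard cap on concurrent connections and an idle timeout that reclaims silent ones. Here is a minimal sketch of that bookkeeping, assuming a hypothetical `ConnectionTable` class with illustrative default limits; the actual socket handling is deliberately left out.

```python
from typing import Dict, List


class ConnectionTable:
    """Tracks persistent connections with a hard cap and an idle timeout."""

    def __init__(self, max_connections: int = 10000, idle_timeout: float = 300.0):
        self.max_connections = max_connections  # hypothetical cap
        self.idle_timeout = idle_timeout        # hypothetical, in seconds
        self.last_seen: Dict[str, float] = {}   # conn_id -> time of last traffic

    def open(self, conn_id: str, now: float) -> bool:
        """Admit a new connection unless the table is already full."""
        if conn_id not in self.last_seen and len(self.last_seen) >= self.max_connections:
            return False
        self.last_seen[conn_id] = now
        return True

    def touch(self, conn_id: str, now: float) -> None:
        """Record traffic on an existing connection."""
        if conn_id in self.last_seen:
            self.last_seen[conn_id] = now

    def reap_idle(self, now: float) -> List[str]:
        """Drop and return connections that have been silent for too long."""
        stale = [c for c, seen in self.last_seen.items() if now - seen > self.idle_timeout]
        for conn_id in stale:
            del self.last_seen[conn_id]
        return stale
```

The caller would run `reap_idle` on a timer and close the underlying sockets for the IDs it returns. Picking the timeout is a trade-off, since an aggressive value frees capacity faster but forces Devices on poor links to reconnect more often.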

Conclusion (Part 2)

The most obvious Attack Surface presented by an IoT Cloud stack is where the Edge first meets the Cloud. By its very nature, the flood of traffic flowing in from the fleet of IoT Devices looks and feels very much like an attempted Security Exploit, and proactive steps must be taken to distinguish the valid connections and data from the possible onrush of intruders. There are some key strategies that can be implemented to help minimize this risk, including the use of Client Certificates, dynamic White Listing of valid IP addresses, and explicit “Mute” controls for Devices that are determined to be either faulty or malicious.

In the next article in this series, we will address the Security concerns that arise from Data at Rest, User Management, API and Application privileging, and integrations.