Value Vector

IoT Security in the Real World Part 3: The Cloud and The Human Machine

Jesse DeMesa

Overview

In previous parts of this series (IoT Security in the Real World – Part 1: Securing the Edge, and IoT Security in the Real World – Part 2: Enter The Cloud) we looked at the IoT Security Landscape from the perspective of an “Edge” device sitting out in the field reporting data, and the “front end” of a Cloud implementation receiving and digesting that data.

In this posting, we will stay with the Cloud, this time focusing on the Cloud platforms, the Users that leverage them, and the introduction of new and unexpected Attack Surfaces. Combined with Edge security and the ingress into the Cloud, a review of your Cloud back end helps to provide an end-to-end view of IoT Security.

NOTE: This post also happens to be based on a real Security Assessment pitch I put together for a client of Momenta Partners.

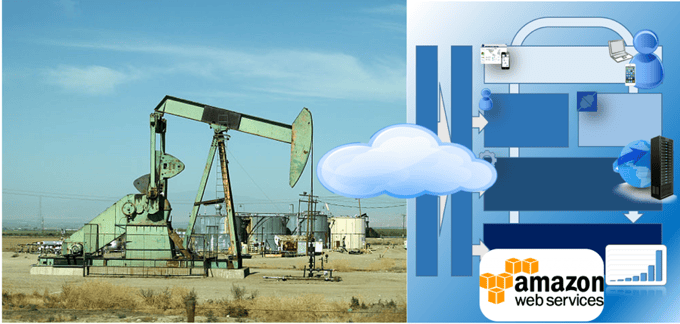

Our Deployment Environment

We will continue to use the example scenario we laid out in the first two parts of this series. We have already introduced a Gateway device into our operating environment near the Well Head, so we have data flowing up into the Cloud, we have secured that data as it flows into the Cloud, and we have reviewed the risks inherent in trusting the data flowing in from numerous distributed devices.

In this posting we will review the Cloud-side security impact of the following setup:

- A proprietary piece of “Agent” Software running on a series of Gateways that send data to, and receive commands from, the Cloud

- An Amazon Web Services (AWS) hosted IoT Cloud Platform where the Ingress bus is trusted (let’s say we’re using Amazon’s SQS and have properly secured it)

- A variety of Clients that need to interact with our Cloud Platform, both human and programmatic

Attack Surfaces Overview

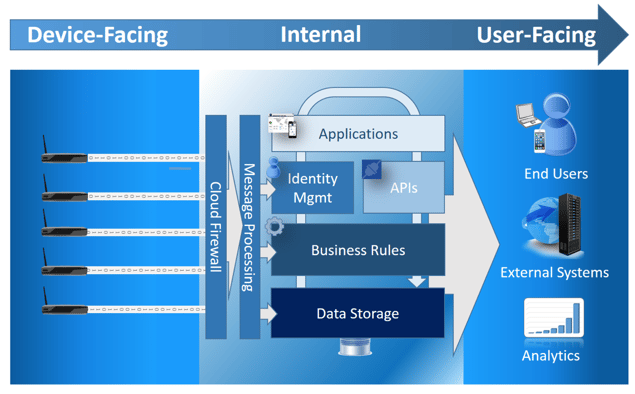

First, (and for the final time) let’s review the 3 “tiers” within the IoT stack:

- Edge: Everything at the “Edge” of the Network, where Data is being Acquired.

- Transport: The communication channel used to move data from one place to another

- Cloud: The Server components that receive data and allow remote Users to interact with the Edge devices. This in turn is comprised of a set of tiers:

  - Rules: The conditional behavior of the Cloud Platform in response to various triggers

  - Data Storage: The “home” for data after it has been ingressed

  - Applications: The functional components that provide Business Value to the clients

For this posting we will review the Attack Surfaces which were introduced in the Cloud once we started to leverage the data from, and access to, the Gateway located in the Well Head environment. It is important to note that I will not be addressing the standard externally-facing IT security concerns like Firewalls, SSH & SSL, as they are rather well addressed in other posts.

For the purposes of discussion, we will re-use the diagram introduced in Part 2 of this series to describe the various functional components of the Cloud stack.

Identity Management

The first Attack Surface presented by the IoT Cloud stack is where the Devices at the Edge first meet the Cloud. This is true both the first time a Device communicates and during all subsequent conversations. Let’s take a look at these two very different Use Cases:

First-Time Communication: Registration / Provisioning

In an IoT scenario, the Cloud needs to become aware of the “Device” at the Edge, and needs to create a representation of this Device up in the Cloud. Amazon refers to this cloud representation as the “Device Shadow”, PTC refers to it as the Device’s “Digital Twin”, but in all cases we are talking about a virtual representation of a physical Device.

This “Shadow” or “Twin” can either be created before the Device ever communicates with the Cloud, in Real-Time when the Device first communicates, or post-facto after the Device has already communicated, and some other process either creates the “Twin”, or maps the Device to a Twin.

Since we’re going to throw around a lot of terms while discussing this topic, let’s define a few of these before we get started:

- Twin: The Cloud representation of the Device

- Registration: The act of a Device communicating into the Cloud for the first time to announce its identity

- Provisioning: The process of adding a Twin to the Cloud platform

Registration – Convenience vs. Security

The act of setting up an IoT Device, particularly in an industrial setting like our Well Head, is typically performed by a Field Service Engineer, whether sourced from the Customer directly, hired as part of a Solution Integrator, or even from the IoT Cloud vendor.

This process is usually scripted, using tools provided by the IoT Solution Provider, but the level of training on the specific technology being deployed may vary greatly from site to site. As a result, it is often desirable to simplify and expedite the process of Registration.

This can come with significant risks if the Registration process is left open without suitable validation steps. Some of the most significant possible Attack Vectors are:

- Open Registration: Many IoT Platforms by default allow all incoming Registration attempts, regardless of the Device Identity used. In this scenario, the Device simply phones home and “announces” its Identity, which is then added to the Cloud Platform as one of the known “Devices”. This allows the Field Engineer to register Devices ad-hoc, as necessary.

- Redeemed Token Registration: In many implementations there is a tighter control on the Identity Management, but the ability to Register is still decoupled from the actual production of the Device. This may be the case with a home Thermostat, where the Customer will be given a single-use Token (such as a “Product Key”) which will be consumed during the Registration process.

- Pre-Provisioned Registration: The most Secure Registration process involves incorporating the Device Identity into the Cloud Platform before the Device ever reaches the field. This can happen by using Certificates for the Device Identity, by using a Hardware based Identity (such as an HMAC), or by generating the Identity at the factory.
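To make the difference between these three Registration styles concrete, here is a minimal sketch in Python. The `Registry` class, its field names and the sample identities are all hypothetical and not taken from any particular IoT Platform. A real Pre-Provisioned flow would also verify proof of possession (for example, a Certificate handshake) rather than a bare string match.

```python
from dataclasses import dataclass, field


@dataclass
class Registry:
    """Hypothetical in-memory device registry, for illustration only."""
    pre_provisioned: set = field(default_factory=set)  # identities loaded before the Device ships
    unused_tokens: set = field(default_factory=set)    # single-use Tokens issued to Customers
    devices: set = field(default_factory=set)          # identities the Platform now accepts

    def register_open(self, device_id: str) -> bool:
        # Open Registration: any announced identity is accepted.
        self.devices.add(device_id)
        return True

    def register_with_token(self, device_id: str, token: str) -> bool:
        # Redeemed Token Registration: the Token is consumed on first use.
        if token not in self.unused_tokens:
            return False
        self.unused_tokens.remove(token)
        self.devices.add(device_id)
        return True

    def register_pre_provisioned(self, device_id: str) -> bool:
        # Pre-Provisioned Registration: only identities known in advance are accepted.
        if device_id not in self.pre_provisioned:
            return False
        self.devices.add(device_id)
        return True
```

With Open Registration, an attacker who simply invents an identity becomes a known Device. The other two paths at least require something the attacker should not have: an unused Token, or an identity the Platform already expects.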

Business Rules

Almost all IoT Platforms contain some capability for automated behavior to be configured via a series of “Rules”. Although this may not be an obvious Attack Vector, it provides a way for inputs from the Device to influence the behavior of the Cloud platform.

Let’s consider the following Business Rule in our Well Head solution:

- When the reported Temp is greater than 100 degrees Fahrenheit:

  - Send an SMS to the associated Field Engineer

  - Create a Service Ticket in the CRM system (like a ServiceMax or Salesforce)

  - Tell the Pump to lower its speed

This is a very common scenario, wherein a single reported out-of-bounds condition will trigger a workflow that tries to automatically adjust the Device to stop the condition as well as begin a workflow that brings the issue to the attention of the Service personnel.

NOTE: We will assume that once the condition is true, the rule will not trigger again until the condition has become false.
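The Rule and its re-arm behavior can be sketched roughly as follows. This is an illustrative Python sketch, not the syntax of any real Rules engine: the `OverTempRule` class is hypothetical, and the actions are plain callables standing in for the SMS, Service Ticket and Pump command.

```python
THRESHOLD_F = 100.0  # threshold from the Business Rule above


class OverTempRule:
    """Fires its actions once when the condition becomes true, then re-arms once it clears."""

    def __init__(self, actions):
        self.actions = actions  # callables standing in for SMS / ticket / pump command
        self.active = False     # True while the condition is currently met

    def on_reading(self, temp_f: float) -> bool:
        over = temp_f > THRESHOLD_F
        fired = over and not self.active  # trigger only on the false-to-true transition
        if fired:
            for action in self.actions:
                action(temp_f)
        self.active = over
        return fired
```

Note that every reading which drops back below the threshold re-arms the Rule, so a value that bounces across 100 degrees will fire the whole workflow each time it crosses.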

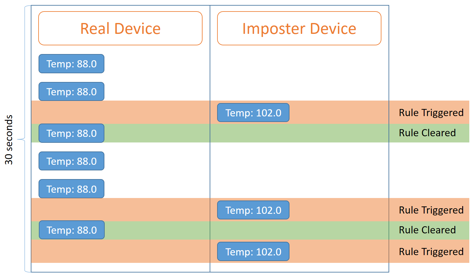

This configuration creates a straightforward Attack Vector for exploiting the Logic. Let’s first attack the rules using an Imposter style attack where we have a “fake” Device with the same Identity posting data:

In this attack, we see three Rule triggers in a short timeframe, which, per our configuration, will trigger 3 SMS messages, 3 records in Salesforce, and will give everyone in the loop the impression that the Device is having serious temperature instability.

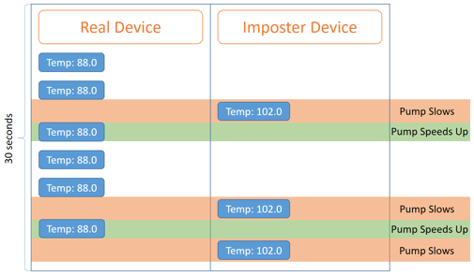

This behavior in itself is more of an annoyance than it is a danger. The real concern arises from the automated control message which is sent back down to the pump at the Well Head. Let’s revisit the same scenario from the perspective of the Well Head itself:

This has an obvious physical effect on the Pump itself, in fact one that is very reminiscent of the Stuxnet attack (https://en.wikipedia.org/wiki/Stuxnet), where falsified sensor readings helped conceal the commands that were damaging the centrifuges.

This is an important Attack Vector due to its simplicity, since the attacker never had any access to the Well Head site, never compromised any of the solution infrastructure, never cracked a single User/Pass, yet they are able to physically impact the Device itself.

While this problem is easiest to solve through very rigid Device Identity management, it can also be mitigated via more considered use of conditions in the Rules, in particular re-evaluation or “de-bouncing” to remove the “spikes”.
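As a rough illustration of the “de-bouncing” idea, the condition can be required to hold for several consecutive readings before the Rule is allowed to trigger. The class name and the default of three readings are assumptions chosen for this sketch, not values from any specific Platform.

```python
class DebouncedCondition:
    """Reports True only after `required` consecutive readings exceed the threshold."""

    def __init__(self, threshold: float, required: int = 3):
        self.threshold = threshold
        self.required = required
        self.streak = 0  # consecutive out-of-bounds readings seen so far

    def update(self, value: float) -> bool:
        if value > self.threshold:
            self.streak += 1
        else:
            self.streak = 0  # any in-range reading resets the count
        return self.streak >= self.required
```

When the real Device and an Imposter interleave readings such as 90, 120, 90, 120, the streak never reaches three and the spikes are absorbed. An Imposter that can post a sustained run of high values would still get through, which is why Device Identity management remains the primary fix.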

Users, Groups and “Over-Privileging”

Once you have a fleet of Devices connected to the Cloud system, there will be a number of Users which will need access to the Data, as well as the ability to send commands down to the Devices.

The most common approach for managing this solution is to create a hierarchy of Groups and Roles that will inherit various capabilities. This will keep the right Users looking at the right Data, correct? In theory (and in practice), this model provides the raw materials necessary to create the correct Group structure.

This approach commonly breaks down into two major distinctions, Visibility (what one can see) and Capability (what one can Do).

User Groups as an Attack Vector

Where we see a possible Attack Vector emerging is when, through oversight or just plain laziness, a Group or User is grossly “Overprivileged” in terms of Capability or Visibility. We have seen this situation arise in the Mobile space, and subsequently get locked down (or at least exposed). When you install a new App on your Phone, you are able to review the specific functions of that Phone which the App needs to access.

Sadly, this transparent impact assessment is missing from most IoT deployments. Once the “Agent” or “Edge” Software is installed, it may pass a Validation/Verification cycle, but it will likely never ask for specific access to subsystems. Rather, the Agent/Edge code will simply access what files or functions it requires.

This scenario was recently reviewed in detail via an IoT Security Paper, wherein the Samsung SmartThings Platform exposed Application Privilege control via its Cloud Platform, but had significant Overprivileging by default which enabled a series of exploits.

When it comes to locking down this type of potential exploit, I will go back to a model from my Axeda days, when the focus was on Remote Monitoring and Management. In this case the ability to assign “Dynamic Groups” was added to allow for a fluid set of Capabilities and Visibility that mutated based on the condition of a Device. In this scenario, a Device which is in an “Error” State enters a Group where the Field Service Engineers can access almost all functions on the Device.

If we apply this approach to the Well Head Setting, we would not enable a Field Service Engineer (FSE) to access or manipulate the Well Head unless certain conditions have been met. The FSE would have very limited monitor-only capabilities when the Well Head is in healthy operating mode, and would need to have the state of the Device change (either due to a local trigger, or a remote request) in order to expand his access to the Well Head and its core functions.
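A minimal sketch of that idea in Python might look like the following. The state names and the capability strings (`read_telemetry`, `set_pump_speed`, `restart_agent`) are hypothetical placeholders, and this is not how Axeda or any other Platform actually models Dynamic Groups.

```python
from enum import Enum


class DeviceState(Enum):
    HEALTHY = "healthy"
    ERROR = "error"


# Hypothetical capability sets for the Field Service Engineer (FSE) role,
# keyed by the current state of the Device.
FSE_CAPABILITIES = {
    DeviceState.HEALTHY: {"read_telemetry"},
    DeviceState.ERROR: {"read_telemetry", "set_pump_speed", "restart_agent"},
}


def fse_can(action: str, state: DeviceState) -> bool:
    """Return True if an FSE may perform `action` on a Device in `state`."""
    return action in FSE_CAPABILITIES[state]
```

The point of the design is that the check takes the Device's state as an input, so the same User has a different answer to "may I change the pump speed?" depending on whether the Well Head is healthy or in an Error State.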

APIs and External Integrations

The final Tier of the IoT Stack involves the programmatic access of Device Data and Capabilities through the use of Web Services and APIs. As IoT Solutions are inherently Heterogeneous, we find that most IoT Solutions are comprised of multiple Applications and Platforms in the Cloud.

We won’t address the standard User & API Key management functions, as they are well addressed via existing Libraries and Technologies. Rather, we will focus on the uniquely “IoT” Use Cases which present possible Attack Vectors. First off, let’s take a look at the primary Use Case for IoT Cloud Platforms – Data Extraction.

Data Extraction

Not all Platforms will have the capability to analyze all of the Data which is flowing in from all of the Devices deployed in the Fleet. In fact, Data Extraction from the Cloud system out to a separate Analytics system or Data Lake is one of the first integrations that we see in Production-scale IoT Deployments.

There are three basic styles to Data Extraction:

- Row by Row extraction: A REST call is made to extract N rows of Data based on query criteria such as a date range

- Bulk Export: A call is made to extract a large file with the sum of many rows of Data in a single export

- Real-Time Stream: As Data is received, it is streamed out to an external system via the use of a Message Queue

Interestingly, there are security impacts to each of these approaches, but we will look at the two that carry the highest risk, which are Row by Row and Real-Time Stream.

1) Row By Row DOS

The cost of querying a Database for Time Series Data based on Date ranges is very small with modern DB architectures. However, this data needs to be loaded into Memory on the Cloud host, it needs to be Serialized into a format the requesting Client can understand, and it needs to be held in Memory until the Client is done reading.

A simple strategy for exploiting this Attack Vector is to request as large a Date range as possible, with the largest Row Count, across as many Clients as possible, and then read that Data out as S L O W L Y as possible. This will have the net effect of filling up the Cloud hosts’ memory with records which are waiting for the Client to receive at the same time that the Client occupies numerous open Connections.

The unfortunate thing about this Attack is that I have seen it! Unintentionally, of course, but it has occurred in a Production environment without any malicious intent. The best way to lock this down is to limit the number of parallel requests from a given Appkey/User, and to limit the number of requests over time by instituting a “cool down” timer between requests if the request frequency is too high.
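Both limits can be sketched together in a few lines of Python. Everything here is an assumption made for illustration: the `ExtractionLimiter` name, the default limits, and the in-memory dictionaries, which a real deployment would likely replace with shared state across Cloud hosts.

```python
import time


class ExtractionLimiter:
    """Per-key guard: caps parallel requests and enforces a cool-down when requests come too fast."""

    def __init__(self, max_parallel=2, max_per_window=5, window_s=60.0,
                 cooldown_s=30.0, clock=time.monotonic):
        self.max_parallel = max_parallel
        self.max_per_window = max_per_window
        self.window_s = window_s
        self.cooldown_s = cooldown_s
        self.clock = clock
        self.in_flight = {}      # key -> number of requests currently open
        self.recent = {}         # key -> timestamps of recently accepted requests
        self.blocked_until = {}  # key -> time before which requests are refused

    def try_acquire(self, key: str) -> bool:
        now = self.clock()
        if now < self.blocked_until.get(key, float("-inf")):
            return False  # still cooling down
        if self.in_flight.get(key, 0) >= self.max_parallel:
            return False  # too many parallel requests
        stamps = [t for t in self.recent.get(key, []) if now - t < self.window_s]
        if len(stamps) >= self.max_per_window:
            # Request frequency too high: start the cool-down timer.
            self.blocked_until[key] = now + self.cooldown_s
            self.recent[key] = stamps
            return False
        stamps.append(now)
        self.recent[key] = stamps
        self.in_flight[key] = self.in_flight.get(key, 0) + 1
        return True

    def release(self, key: str) -> None:
        # Call when the Client has finished (or abandoned) reading the response.
        self.in_flight[key] = max(0, self.in_flight.get(key, 0) - 1)
```

The request handler would call `try_acquire(appkey)` before running the query and `release(appkey)` once the response is fully read or the connection is closed, so a slow reader keeps occupying one of its own few slots rather than the host's memory at large.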

2) Integration via MQ

While Message Queues remain the go-to strategy for decoupling systems via Producer/Consumer relationships, they can lead to unintentional byproducts which can in turn act as a DOS (or even take a Cloud instance down).

The primary assumption in the MQ contract is that the Consumers will Consume at an equal or faster average rate than the Producer. When this becomes imbalanced, the Queue will begin to back up. No Queue, no matter how large the Filesystem backup, is limitless.

I have witnessed this in Production when a set of Consumers suddenly went offline due to a set of circumstances unrelated to the Cloud system itself. And so the Queue began to fill. Day by Day, with notifications sent to the Consumer. Eventually the Filesystem allocated to the MQ VM filled up, with the behavior on the MQ server being to simply hang on new message enqueue operations! This was the worst scenario, since it meant that the MQ didn’t seem to be offline, it seemed to be up, until a long timeout was reached. This eventually tied up all of the Threads available in the Cloud instance, and the instance became unresponsive.

The best approach for limiting the possible DOS blowback of MQ based integrations is to leverage aggressive timeouts, and to treat the Data as ephemeral in the event of an extended drop-off in Consumption.
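Here is one way that could look, sketched with Python's standard `queue.Queue` standing in for a real MQ broker. The `publish` helper, the short default timeout and the drop-oldest policy are assumptions for illustration. Dropping the newest message instead would be an equally valid reading of "ephemeral".

```python
import queue


def publish(outbound: queue.Queue, message, timeout_s: float = 0.05) -> int:
    """Enqueue with a short timeout; if the queue stays full, sacrifice the oldest message.

    Returns the number of messages dropped (0 when the enqueue succeeded normally).
    """
    try:
        outbound.put(message, timeout=timeout_s)  # never block the caller for long
        return 0
    except queue.Full:
        pass

    dropped = 0
    try:
        outbound.get_nowait()  # discard the oldest message to make room
        dropped += 1
    except queue.Empty:
        pass  # a Consumer drained the queue in the meantime
    try:
        outbound.put_nowait(message)
    except queue.Full:
        dropped += 1  # still full: give up on the new message rather than hang
    return dropped
```

The important property is that the Producer's Thread always returns within roughly `timeout_s`, so a stalled Consumer costs some Data but does not tie up the Cloud instance.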

Conclusion

We reviewed a number of Cloud-side Security vulnerabilities in IoT as part of this series of IoT Security Blog Posts, and I will apologize in retrospect for the length! It is a challenge to address this topic in anything less than a series of Whitepapers, but if the Reader can walk away with an appreciation of the different Attack Vectors and Attack Surfaces which are introduced in IoT, then I feel that I have done my job here.

One additional closing note of caution. There are solutions to every problem that arises in IoT, just as there are in the Mobile space, the IT space, the IaaS space and so on. Be wary of the seemingly endless string of “IoT Device Hacked!” articles, and do not let the fear of new Risks limit your adoption of game-changing Technology.

If you have any questions or comments on this series, please contact us.